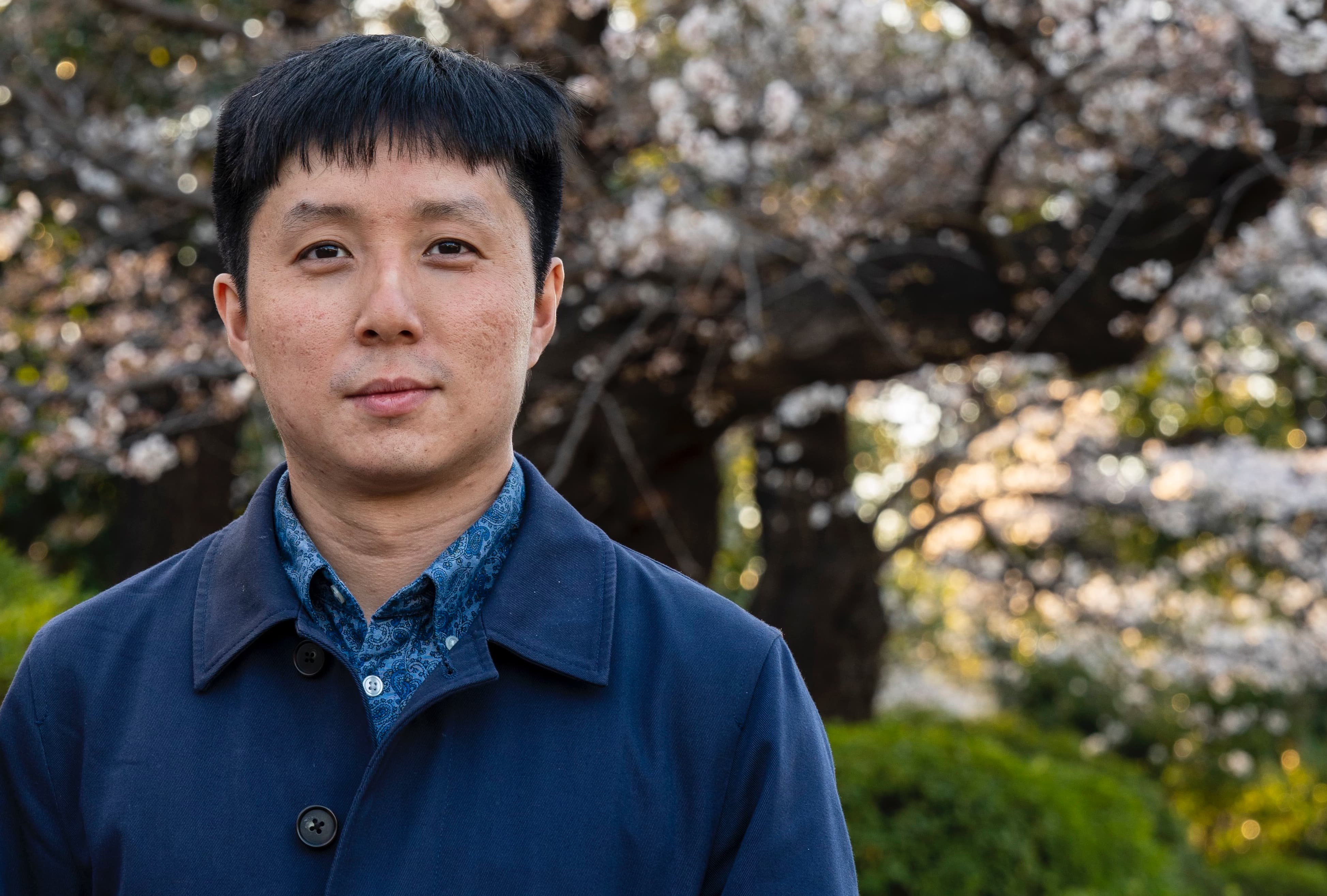

Hi! I'm Peter Lee

As a seasoned data engineer and DevOps engineer, I have a strong background in data infrastructure, data science, and backend development.

I have practical experience working with public clouds (GCP and AWS), and have a track record of successfully designing and deploying pipelines in on-premises environments.

Work

Kokomonster (TIOM AI)

Senior Backend Engineer & DevOps Engineer

2023 May -> Present

Taipei, Taiwan

A Hong Kong-based startup developing an AI-driven app. The team works remotely across multiple countries, and I am responsible for the backend and infrastructure.

Snapask

Data Engineer & DevOps Engineer

2021 Dec -> 2023 March

Taipei, Taiwan

Designed new data infrastructure on AWS that halved cost and tripled query speed. For example, I migrated Airflow 1.x to Airflow 2.0 on AWS MWAA and optimized machine allocation to reduce cloud cost. (EKS, MWAA, EMR, EC2, RDS)

Designed a log service for collecting custom log events, improving data collection reliability and reducing reliance on third-party analytics platforms.

Maintained and developed Terraform modules for our AWS infrastructure, which allowed us to manage it as code (IaC). With Terraform, we could easily provision and manage AWS resources such as EC2 instances and EKS clusters.

Used Flux CD to synchronize Kubernetes jobs with our GitHub repository, ensuring that the jobs were always up to date and that any change made in the repository was reflected in the cluster. Automating the synchronization reduced manual errors and saved time in managing our Kubernetes infrastructure.

On the data team, I am responsible for building data infrastructure and designing pipelines. I have a strong passion for learning and for sharing knowledge with team members. I also specialize in improving system performance by introducing new technology.

Data Collection and Exchange

1. Design different algorithms and pipelines for automatic data exchange with business partners.

2. Design the backend system for data collection of Data SDK. The system reduces the time to update data from a day to an hour and achieves a cost reduction of 40% for ETL machines.

3. Deploy Elastic Stack to validate and visualize data from each new client in real time.

Data Architecture

1. Introduce Kubernetes Engine to automate deployment and monitoring, reducing cost by 75% and saving 20 hours of personnel time.

2. Provide advice on architecture design and on deploying Kubernetes with Istio, Cloud Functions, Apache Airflow, Compute Engine, serverless services, and so on.

3. Develop an internal bot notification system that sends instant error notifications for ETL states and health-check monitoring.

Data Application

1. Build a platform for highly customized tagging services. The platform assigns each individual ID tags such as personal interests, language, age, and gender, and reports the tagging rate of each tag.

2. Optimize the data update pipeline to generate files and reports directly, reducing the personnel time required from 2 weeks to less than 1 hour.

3. Replace the previous VM/EMR environment for ETL with Apache Airflow and Kubernetes CronJobs to make the ETL pipeline more flexible and cost-effective. I also designed a new tool for the team that shows the cost (size) of every query.

Continuous Integration and Deployment

1. Design the complete CI/CD pipeline, including building Docker images, pushing them to Container Registry, 2-hours deploying images on the Kubernetes cluster, and deploying images to the application environment after running unit tests.

2. Set up Jenkins on GKE and design the related architecture to run ETL pipelines automatically each day.

Data Migration

Migrate existing projects and the ETL pipeline from AWS to GCP. Transfer data from Hive and S3 to GCP. Set up a GitLab server in GCP. Build ETL pipelines for efficient data migration so that team members can access the latest data on GCP.

PDIS (Public Digital Innovation Space)

Software Engineer (Alternative Military Service)

2017 Sep -> 2018 Aug

Executive Yuan 行政院, Taipei, Taiwan

Website: PDIS

PDIS is a government organization in Taiwan, also known as the Executive Yuan office of Digital Minister Audrey Tang (唐鳳). She leads the PDIS team in helping our government. We incubate and facilitate public digital innovation for the government.

1: As a software developer: I used the LINE Bot API to connect our internal systems into an easy-to-use app that saves our colleagues time. I also designed API interfaces that allow other internal systems to hook into our bot system. So far, it bridges our meeting reservation system and our electronic bulletin board system.

2: On productivity and quality: I designed software that displays real-time subtitles during streaming. To meet the schedule, I took it from idea to prototype in only one week. The system now works perfectly in all of our video conferences.

3: As a quick learner and contributor: I was involved in many open source projects, such as Sandstorm (an open source private cloud platform built on containers) and Rocket.Chat (an open source chat app similar to Slack). We fixed many issues, added many features, and gave them back to the community.

4: Eager to learn everything: in my free time, I like to think about how to make systems more efficient, and I keep finding ways to improve the current ones. For the subtitle display system, for example, I would like to automate parts of it so that the computer can label people with their names in real time and display that information in the subtitles. Along the way, I fixed various issues and bugs in TensorFlow. NVIDIA gave me an award as an outstanding community developer in April 2018.

Selected Skills

Data

Kubernetes

Airflow

Spark

Elasticsearch

AWS

GCP

DevOps

CI/CD

Docker

Terraform

Flux CD

Python

Git

Backend/Web

Node.js

Python

Nginx

PostgreSQL

MySQL

MongoDB

Publications

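IEICE (電子情報通信学会, Institute of Electronics, Information and Communication Engineers) conference paper

IEICE Technical Report (信学技報), vol. 117, no. 184, SC2017-13, pp. 1-6, Aug. 2017.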

SC2017-13 2017-08-18 (SWIM, SC)

Selected Projects

This iOS app allows users to configure their iOS DNS settings with DNS-over-HTTPS or DNS-over-TLS encryption. It sold more than 10,000 units in one year.

Awards

2018 April - Outstanding Jetson Developer Community Contributions, Porting TensorFlow for NVIDIA Jetson.

Porting TensorFlow to NVIDIA Jetson

Repository tensorflow-nvJetson

TensorFlow #26985, #20025, #19075, #17394

Education

University of Aizu, Fukushima, Japan.

2016 March - 2017 March

Master's Degree in Computer Science.

Double Degree Program.

Tamkang University, Taipei, Taiwan.

2011-2014

Bachelor of Engineering (B.E.) Computer Science.

Embedded System Lab

2014-2017

Master's Degree in Computer Science.

Cloud Computing Lab